Description:

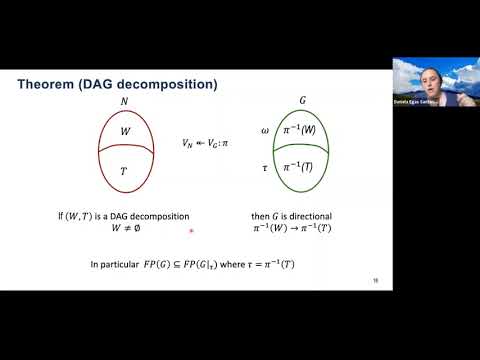

Explore the relationship between network connectivity and neural activity in this 54-minute lecture on nerve theorems for fixed points of neural networks. Delve into threshold-linear networks (TLNs) and combinatorial threshold-linear networks (CTLNs), examining how graph structure shapes network dynamics. Learn about a novel method of covering CTLN graphs with smaller directed graphs, and discover how the nerve of the cover yields information about the network's fixed points. Understand how three "nerve theorems" constrain these fixed points and effectively reduce the dimensionality of CTLN dynamical systems. Follow along as the speaker illustrates these concepts with examples, including DAG decompositions, cycle nerves, and grid graphs. Gain insight into computational neuroscience and applied algebraic topology as you uncover the connections between graph theory and neural network behavior.
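For readers new to CTLNs, the sketch below illustrates what "fixed points" of such a network means. It assumes the standard CTLN convention from the literature, which this description does not state: for a directed graph on n neurons, W[i][j] = 0 if i = j, -1 + ε if j → i, and -1 - δ otherwise, with dynamics dx/dt = -x + [Wx + θ]₊ and the common defaults ε = 0.25, δ = 0.5, θ = 1. It finds fixed-point supports by brute-force enumeration of every subset σ of neurons, which is exponential in n. This is a naive check for illustration only, not the nerve-theorem method presented in the lecture. The helper names `ctln_matrix` and `fixed_point_supports` are hypothetical.

```python
import itertools

import numpy as np


def ctln_matrix(n, edges, eps=0.25, delta=0.5):
    """Hypothetical helper: build a CTLN weight matrix (assumed standard convention).

    `edges` holds pairs (j, i) meaning the directed edge j -> i.
    """
    W = np.full((n, n), -1.0 - delta)  # no edge j -> i: stronger inhibition
    for j, i in edges:
        W[i, j] = -1.0 + eps           # edge j -> i: weaker inhibition
    np.fill_diagonal(W, 0.0)           # no self-connections
    return W


def fixed_point_supports(W, theta=1.0, tol=1e-9):
    """Hypothetical helper: brute-force search for fixed points of
    dx/dt = -x + [W x + theta]_+ by checking every candidate support."""
    n = W.shape[0]
    found = []
    for k in range(1, n + 1):
        for sigma in itertools.combinations(range(n), k):
            idx = list(sigma)
            # On the support, x_sigma must solve (I - W_sigma) x_sigma = theta.
            A = np.eye(k) - W[np.ix_(idx, idx)]
            try:
                x_sigma = np.linalg.solve(A, theta * np.ones(k))
            except np.linalg.LinAlgError:
                continue  # singular subsystem: skip this degenerate case
            if np.any(x_sigma <= tol):
                continue  # every neuron in the support must be active
            x = np.zeros(n)
            x[idx] = x_sigma
            off = [i for i in range(n) if i not in sigma]
            # Off-support neurons must receive strictly negative net input.
            if off and np.any(W[off] @ x + theta > -tol):
                continue
            found.append((sigma, x))
    return found


# Example: the 3-cycle 0 -> 1 -> 2 -> 0.
W = ctln_matrix(3, [(0, 1), (1, 2), (2, 0)])
for sigma, x in fixed_point_supports(W):
    print(sigma, np.round(x, 3))
```

For the 3-cycle, only the full support (0, 1, 2) survives, with every neuron at 1/3.25 ≈ 0.308. Singletons fail because each active neuron drives its successor above threshold, and pairs fail because the solved activity goes negative. The nerve theorems discussed in the lecture aim to predict such supports from smaller pieces of the graph instead of enumerating all 2ⁿ - 1 subsets.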

Nerve Theorems for Fixed Points of Neural Networks


#Mathematics

#Graph Theory

#Computer Science

#Artificial Intelligence

#Neural Networks

#Science

#Biology

#Neuroscience

#Computational Neuroscience

#Computer Networking

#Network Engineering