Description:

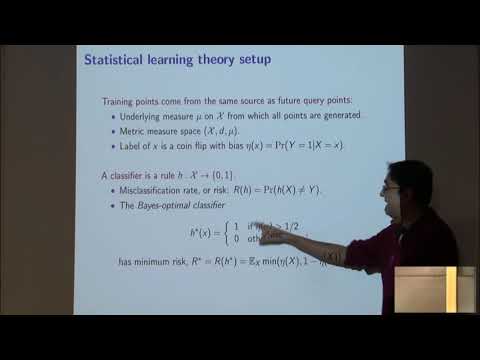

Explore the convergence of nearest neighbor classification in this 49-minute Members' Seminar presented by Sanjoy Dasgupta of the University of California, San Diego. Delve into the nonparametric estimator, the statistical learning theory setup, and consistency results under continuity. Examine universal consistency in Euclidean space and in metric spaces, smoothness and margin conditions, and accurate rates of convergence. Investigate the tradeoffs in choosing k, adaptive NN classifiers, and nonparametric notions of margin. Conclude with open problems in nearest neighbor classification.

Convergence of Nearest Neighbor Classification - Sanjoy Dasgupta
