Description:

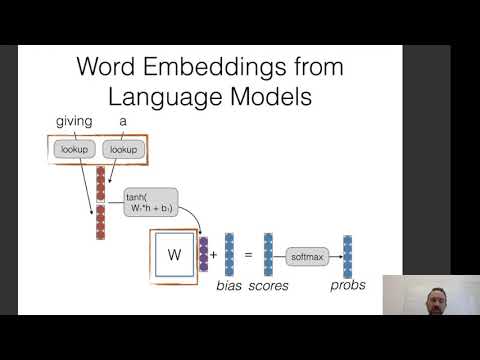

Learn about distributional semantics and word vectors in this lecture from CMU's Neural Networks for NLP course. Explore how words can be described by their context, using both count-based and prediction-based methods. Cover the skip-gram and continuous bag-of-words (CBOW) models, along with more advanced word vector approaches. See how word vectors are evaluated, both intrinsically and extrinsically, how they are visualized, and where their limitations lie. Additional topics include contextualization, WordNet, GloVe, pre-trained embeddings, and techniques for improving embeddings such as retrofitting and de-biasing.

Neural Nets for NLP 2021 - Distributional Semantics and Word Vectors

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Word Embeddings

#Language Models

#Word Vectors