Description:

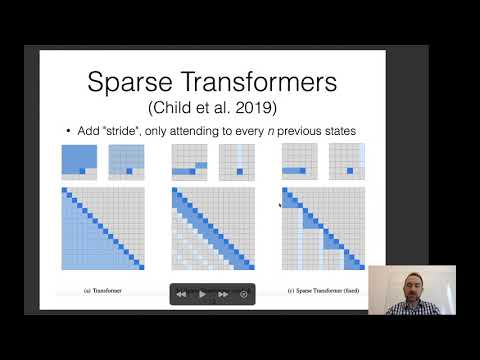

Learn about document-level natural language processing tasks and models in this lecture from CMU's Neural Networks for NLP course. Explore techniques for handling long documents across sentence-level and document-level tasks, including entity coreference resolution and discourse parsing. Examine approaches such as passing state across sentences indefinitely, encoding document context separately, and applying self-attention across sentences. Dive into specific models such as Transformer-XL, Adaptive Span Transformers, and Reformer. Understand the components and challenges of coreference resolution, including mention detection and different modeling approaches. Discover how neural network models can be applied to coreference resolution and discourse parsing. Gain insights into evaluating document-level models and leveraging discourse structure in neural approaches.

Neural Nets for NLP 2021 - Document-Level Models

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Transformers

#Deep Learning

#Self-Attention