Description:

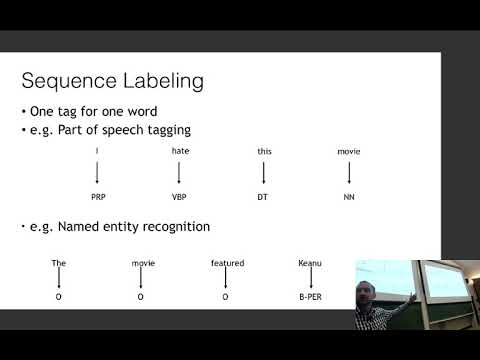

Explore structured prediction in natural language processing in this lecture, which covers conditional random fields (CRFs), local independence assumptions, and their applications. Learn why modeling interactions between outputs matters, how local and global normalization differ, and how CRFs are trained and decoded. Topics include sequence labeling, recurrent decoders, and computing the partition function.

Neural Nets for NLP 2020 - Structured Prediction with Local Independence Assumptions

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Programming

#Databases

#Database Design

#Normalization

#Machine Learning

#Sequence Labeling