Description:

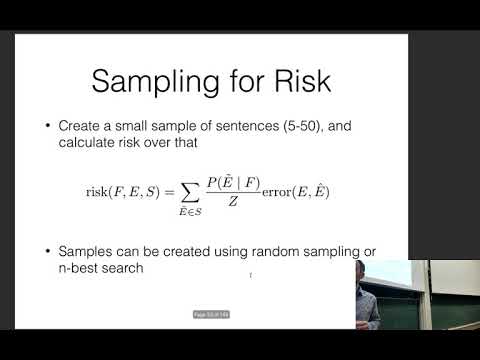

Explore minimum risk training and reinforcement learning in natural language processing through this comprehensive lecture from CMU's Neural Networks for NLP course. Delve into concepts such as error and risk minimization, policy gradient methods, REINFORCE algorithm, and value-based reinforcement learning. Learn about techniques for stabilizing reinforcement learning, including adding baselines and increasing batch sizes. Understand the applications and challenges of reinforcement learning in NLP tasks, and gain insights into when to use these approaches effectively. Discover methods for estimating value functions and addressing problems like exposure bias and disregard for evaluation metrics in neural language models.

Neural Nets for NLP - Minimum Risk Training and Reinforcement Learning

Add to list

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Reinforcement Learning

#Policy Gradient