Description:

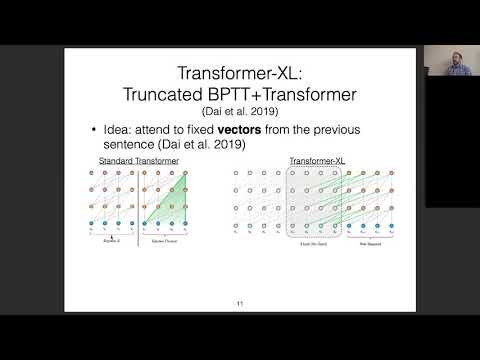

Learn about document-level natural language processing in this lecture from CMU's Neural Networks for NLP course. Explore document-level language modeling with recurrent networks and self-attention mechanisms. Study entity coreference resolution, including mention detection, mention pair models, and entity-level distributed representations. Examine discourse parsing with attention-based hierarchical neural networks. See how these techniques apply to tasks that span multiple sentences and entire documents.

Neural Nets for NLP 2020 - Document Level Models

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Transformers

#Reinforcement Learning

#Deep Reinforcement Learning

#Deep Learning

#Self-Attention