Description:

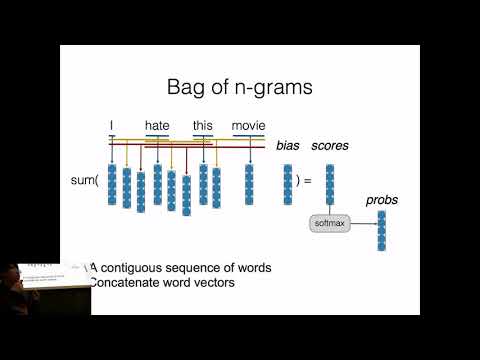

This lecture from CMU's Neural Networks for NLP course covers convolutional neural networks for text processing. It reviews bag-of-words models for sentiment classification, including continuous bag of words (CBOW) and deep CBOW, as well as neural sequence models. It then shows how convolution is applied to context windows and to sentence modeling. Core concepts include 2D convolution, stride, padding, multiple filters, and pooling, together with the architecture of CNN models for NLP tasks: embedding layers, convolutional layers, pooling layers, and output layers. More advanced techniques include stacked convolutions, dilated convolutions, and structured convolution. Applications discussed include dynamic filter CNNs, NLP from scratch, and CNN-RNN-CRF models for tagging. The lecture also considers the design philosophy of convolutional models and the structural (inductive) biases they introduce for text processing.

Neural Nets for NLP 2020 - Convolutional Neural Networks for Text

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Text Analysis

#Bag of Words