Description:

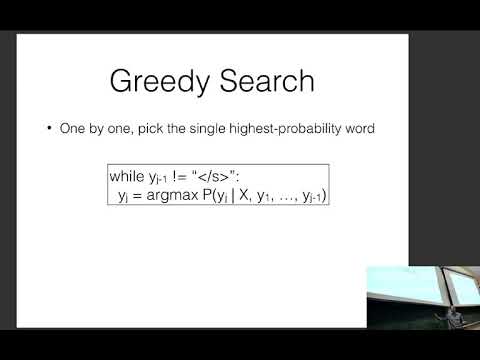

This lecture from CMU's CS 11-747 course (Neural Nets for NLP) covers conditioned generation in neural networks for natural language processing. It introduces language models in their generative and conditional forms, the types of data that generation can be conditioned on, and encoder-decoder models, including how hidden states are passed from the encoder to the decoder. It then turns to the generation problem itself: sampling, and search strategies such as greedy search and beam search. The lecture also covers ensembling methods, including linear and log-linear interpolation, the differences between the two, and stacking. It closes with evaluation paradigms, including human evaluation and perplexity.

Neural Nets for NLP 2020: Conditioned Generation

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Algorithms

#Search Algorithms

#Machine Learning

#Language Models

#Encoder-Decoder Models