Description:

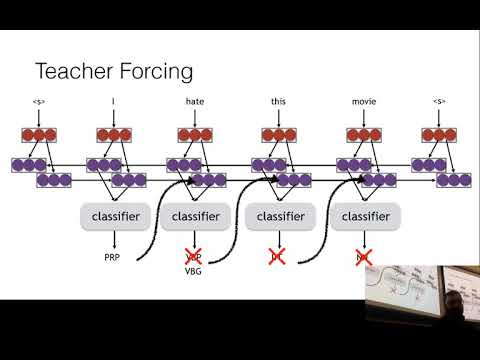

Explore structured prediction with local independence assumptions in this lecture from CMU's Neural Networks for NLP course. Learn the rationale behind local independence assumptions and gain insight into Conditional Random Fields (CRFs). Study sequence labeling techniques, including BiLSTM-CRF models, and understand how these models are trained and decoded. See how CNNs can be used to build character-level representations, and survey reward functions for structured prediction. Examine methods for minimizing risk through enumeration and sampling, along with the concept of token-wise minimum risk. The lecture closes the gap between these techniques and their practical use in sequence labeling tasks.

Neural Nets for NLP - Structured Prediction with Local Independence Assumptions

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Convolutional Neural Networks (CNN)

#Machine Learning

#Sequence Labeling