Description:

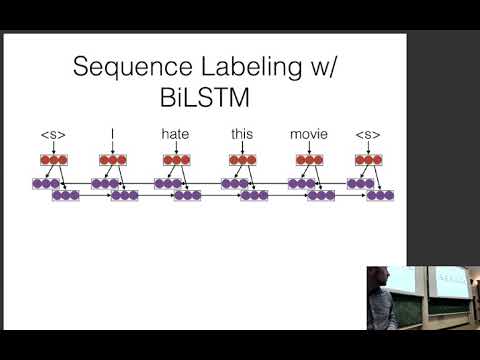

Explore the basics of structured prediction in this lecture from CMU's Neural Networks for NLP course. The lecture covers the structured perceptron algorithm, structured max-margin objectives, and simple remedies for exposure bias. Learn about the different types of prediction, why it matters to model interactions between outputs, and how structured models are trained. Examine local normalization, global normalization, and cost-augmented decoding for Hamming loss. Gain insight into sequence labeling, the output-structure considerations that go into designing a tagger, and the challenges of training with a structured hinge loss.
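As a rough illustration of how several of these topics fit together, here is a minimal sketch of a structured perceptron for sequence labeling. It is not taken from the lecture. It assumes a first-order tagger whose sequence score is a sum of hypothetical sparse emission `("emit", tag, word)` and transition `("trans", prev_tag, tag)` weights, decoded exactly with Viterbi. Passing `gold` to `viterbi` adds a Hamming cost of 1 at every wrongly tagged position (cost-augmented decoding). Training on those predictions turns the perceptron update into a subgradient step on a margin-rescaled structured hinge loss, here without regularization. The toy data and tag set are invented for illustration.

```python
from collections import defaultdict

START = "<s>"  # hypothetical start-of-sentence tag for the first transition


def features(words, tags):
    """Count emission (tag, word) and transition (prev_tag, tag) features."""
    feats = defaultdict(int)
    prev = START
    for word, tag in zip(words, tags):
        feats[("emit", tag, word)] += 1
        feats[("trans", prev, tag)] += 1
        prev = tag
    return feats


def viterbi(words, tagset, weights, gold=None):
    """Return the highest-scoring tag sequence under a first-order model.

    If gold is given, add a Hamming cost of 1 at each position whose tag
    differs from the gold tag (cost-augmented decoding).
    """
    if not words:
        return []
    n = len(words)
    best = [dict() for _ in range(n)]  # best[i][t]: best prefix score ending in t
    back = [dict() for _ in range(n)]  # back[i][t]: previous tag on that prefix
    for i, word in enumerate(words):
        for t in tagset:
            local = weights[("emit", t, word)]
            if gold is not None and t != gold[i]:
                local += 1.0  # Hamming cost decomposes over positions
            if i == 0:
                best[i][t] = local + weights[("trans", START, t)]
                back[i][t] = None
            else:
                prev_t = max(tagset, key=lambda p: best[i - 1][p] + weights[("trans", p, t)])
                best[i][t] = best[i - 1][prev_t] + weights[("trans", prev_t, t)] + local
                back[i][t] = prev_t
    # Follow back-pointers from the best final tag.
    tag = max(tagset, key=lambda t: best[n - 1][t])
    tags = [tag]
    for i in range(n - 1, 0, -1):
        tag = back[i][tag]
        tags.append(tag)
    return tags[::-1]


def train(data, tagset, epochs=5, cost_augmented=False):
    """Structured perceptron; with cost_augmented=True, a hinge-loss subgradient step."""
    weights = defaultdict(float)
    for _ in range(epochs):
        for words, gold in data:
            pred = viterbi(words, tagset, weights, gold if cost_augmented else None)
            if pred != gold:
                # Move toward the gold features and away from the predicted ones.
                for f, c in features(words, gold).items():
                    weights[f] += c
                for f, c in features(words, pred).items():
                    weights[f] -= c
    return weights


# Toy usage (invented data).
data = [
    (["the", "dog", "barks"], ["DET", "NOUN", "VERB"]),
    (["a", "cat", "sleeps"], ["DET", "NOUN", "VERB"]),
    (["the", "cat", "barks"], ["DET", "NOUN", "VERB"]),
]
tagset = ["DET", "NOUN", "VERB"]
w = train(data, tagset, epochs=5)
print(viterbi(["a", "dog", "sleeps"], tagset, w))  # ['DET', 'NOUN', 'VERB']
```

Because the Hamming cost decomposes over positions, it can be folded into the per-position scores, so cost-augmented decoding reuses the same Viterbi search. With `cost_augmented=True`, updates roughly continue until the gold sequence outscores each alternative by at least its Hamming distance, rather than merely outscoring it.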

Neural Nets for NLP - Structured Prediction Basics


#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Model Training

#Sequence Labeling