Description:

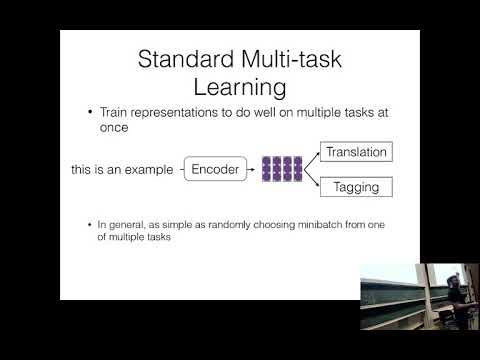

Explore multi-task and multilingual learning in natural language processing through this lecture from CMU's Neural Networks for NLP course. Key concepts include feature extraction, increasing training data through multi-tasking, pre-training encoders, and regularization. The lecture examines several approaches to multi-task learning, including selective parameter adaptation, soft parameter tying, and supervised domain adaptation. It also covers multilingual structured prediction, sequence-to-sequence models, and the challenges of handling multiple annotation standards. Antonis Anastasopoulos presents practical examples and design principles for building effective multi-task and multilingual NLP systems.

Neural Nets for NLP - Multi-task, Multi-lingual Learning

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Multi-task Learning (MTL)