Description:

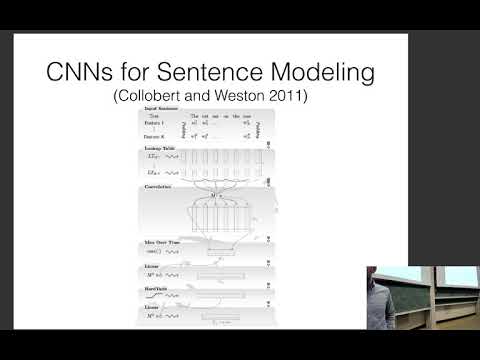

This lecture from CMU's Neural Networks for NLP course covers convolutional neural networks for natural language processing. It starts with bag-of-words and n-gram representations, then examines applications of convolution to language: context windows, sentence modeling, and structured convolution. It goes on to stacked and dilated convolutions, convolutional models for sentence pairs, and the role of pooling strategies and non-linear activation functions in language processing tasks.

Neural Nets for NLP 2019 - Convolutional Neural Networks for Language

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Bag of Words