Description:

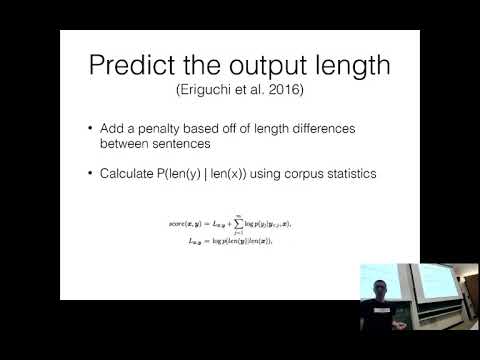

Explore advanced search algorithms for natural language processing in this lecture from CMU's Neural Networks for NLP course. Dive into beam search, A* search, and search with future costs. Learn about pruning methods, variable length output sequences, and techniques for improving diversity in top choices. Examine the challenges of larger beam sizes and strategies to address them. Discover applications in machine translation, generative parsing, and CCG parsing. Investigate methods like Monte Carlo Tree Search, actor-critic models, and particle filters for enhancing search performance. Gain insights into reranking techniques and the benefits and drawbacks of various search algorithms in NLP tasks.

Neural Nets for NLP 2019 - Advanced Search Algorithms

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Monte Carlo Tree Search