Description:

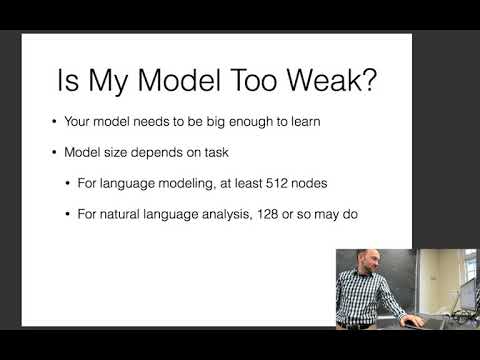

Explore techniques for debugging neural networks in natural language processing applications. Learn to identify and address common training-time problems, including model weakness, optimization challenges, and overfitting. Discover strategies for tuning hyperparameters, initializing weights, and managing minibatches. Examine the importance of data analysis and quantitative evaluation, and the relationship between loss functions and evaluation metrics. Gain insights into early stopping, dropout, and other techniques for improving model performance. Understand the complexities of search and decoding in NLP tasks, and learn how to reproduce previous research results effectively.
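As a rough illustration of how early stopping connects to the loss-versus-metric point above, here is a minimal sketch that is not taken from the course. It assumes you have already collected one development-set score per epoch (higher is better, e.g. accuracy or BLEU) and picks the checkpoint to keep. The function name `early_stopping_epoch` and the `patience` parameter are illustrative assumptions, not a specific library's API.

```python
from typing import List


def early_stopping_epoch(dev_scores: List[float], patience: int = 2) -> int:
    """Return the index of the epoch whose checkpoint should be kept.

    Hypothetical helper: scans per-epoch dev-set scores (higher is better)
    and stops once `patience` consecutive epochs fail to beat the best score.
    Monitoring the evaluation metric rather than the training loss is the
    point: the two do not always move together.
    """
    best_epoch = 0
    best_score = float("-inf")
    epochs_without_improvement = 0
    for epoch, score in enumerate(dev_scores):
        if score > best_score:
            # New best dev score: remember this checkpoint and reset the counter.
            best_score = score
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop training; later epochs are never run
    return best_epoch


if __name__ == "__main__":
    scores = [0.61, 0.68, 0.71, 0.70, 0.69, 0.75]
    print(early_stopping_epoch(scores, patience=2))
```

With `patience=2`, the sketch stops after two non-improving epochs and keeps epoch 2, so the later 0.75 is never reached. A larger patience trades extra training time for a lower chance of stopping on a temporary plateau.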

Neural Nets for NLP - Debugging Neural Nets for NLP

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Data Science

#Data Analysis

#Quantitative Analysis

#Machine Learning

#Overfitting

#Loss Functions