Description:

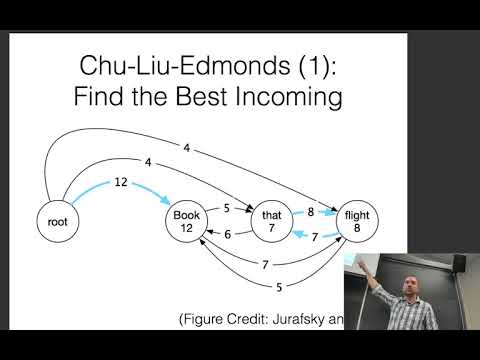

Explore parsing with dynamic programs in this lecture from CMU's Neural Networks for NLP course. It covers graph-based parsing, minimum spanning tree parsing, and structured training techniques, along with dynamic programming methods for phrase structure parsing and reranking approaches. Examine the Chu-Liu-Edmonds and Eisner algorithms, and see how these methods carry over from traditional to neural models. Investigate global probabilistic training, the CKY and Viterbi algorithms, and Conditional Random Fields (CRFs) for parsing. Gain insights into neural CRFs, structured inference, and recursive neural networks for parsing tasks.
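As a rough illustration of the CKY and Viterbi ideas mentioned above (not code from the lecture itself), here is a minimal Viterbi CKY sketch in Python. It assumes a hypothetical toy PCFG in Chomsky normal form with hand-picked probabilities; the grammar and the names `viterbi_cky`, `build_tree`, and `parse` are illustrative only. The chart keeps, for every span and nonterminal, the best log score found so far, plus a backpointer for recovering the tree.

```python
import math

# Hypothetical toy PCFG in Chomsky normal form, stored as log-probabilities.
# Binary rules: (parent, left_child, right_child) -> log prob
BINARY = {
    ("S", "NP", "VP"): math.log(1.0),
    ("VP", "V", "NP"): math.log(0.7),
    ("VP", "VP", "PP"): math.log(0.3),
    ("NP", "NP", "PP"): math.log(0.2),
    ("PP", "P", "NP"): math.log(1.0),
}
# Lexical rules: (preterminal, word) -> log prob
LEXICAL = {
    ("NP", "she"): math.log(0.3),
    ("V", "eats"): math.log(1.0),
    ("NP", "fish"): math.log(0.3),
    ("P", "with"): math.log(1.0),
    ("NP", "chopsticks"): math.log(0.2),
}


def viterbi_cky(words):
    """Fill a chart where chart[i][j][X] is the best log score of X over words[i:j]."""
    n = len(words)
    chart = [[{} for _ in range(n + 1)] for _ in range(n + 1)]
    back = [[{} for _ in range(n + 1)] for _ in range(n + 1)]
    # Width-1 spans come from the lexical rules.
    for i, w in enumerate(words):
        for (tag, word), lp in LEXICAL.items():
            if word == w:
                chart[i][i + 1][tag] = lp
                back[i][i + 1][tag] = w
    # Wider spans combine two adjacent sub-spans with a binary rule (max, not sum).
    for length in range(2, n + 1):
        for i in range(n - length + 1):
            j = i + length
            for k in range(i + 1, j):
                left, right = chart[i][k], chart[k][j]
                for (parent, b, c), lp in BINARY.items():
                    if b in left and c in right:
                        score = lp + left[b] + right[c]
                        if score > chart[i][j].get(parent, -math.inf):
                            chart[i][j][parent] = score
                            back[i][j][parent] = (k, b, c)
    return chart, back


def build_tree(back, i, j, label):
    """Follow backpointers to rebuild the best tree as nested tuples."""
    entry = back[i][j][label]
    if isinstance(entry, str):
        return (label, entry)
    k, b, c = entry
    return (label, build_tree(back, i, k, b), build_tree(back, k, j, c))


def parse(words):
    """Return (probability, tree) of the best S parse, or None if none exists."""
    chart, back = viterbi_cky(words)
    n = len(words)
    if "S" not in chart[0][n]:
        return None
    return math.exp(chart[0][n]["S"]), build_tree(back, 0, n, "S")


if __name__ == "__main__":
    print(parse("she eats fish with chopsticks".split()))
```

With this toy grammar the VP-attachment reading of "with chopsticks" scores higher than the NP-attachment one, so it is the tree returned. Replacing `max` with a log-sum-exp over the same recursion would give the inside algorithm, which computes the partition function used for global probabilistic (CRF-style) training; in a neural parser the rule scores would typically come from a network rather than a fixed table.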

Neural Nets for NLP 2017 - Parsing With Dynamic Programs

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Algorithms

#Dynamic programming