Description:

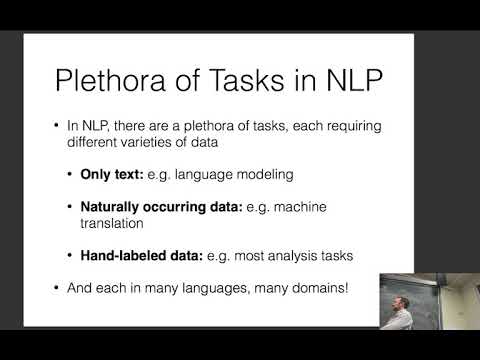

Explore multilingual and multitask learning in neural networks for natural language processing through this 52-minute lecture by Graham Neubig. Delve into key concepts such as multitask learning, domain adaptation, and multilingual learning. Gain insights into increasing the amount of training data through multitask approaches, pre-training encoders, and regularization techniques. Examine supervised and unsupervised domain adaptation methods, multilingual inputs and outputs, and teacher-student networks for multilingual adaptation. Understand the various types of multitasking and how to handle multiple annotation standards in NLP tasks. Access the accompanying slides and related course materials to go further with these advanced NLP techniques.

Neural Nets for NLP 2017 - Multilingual and Multitask Learning

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Machine Learning

#Domain Adaptation