Description:

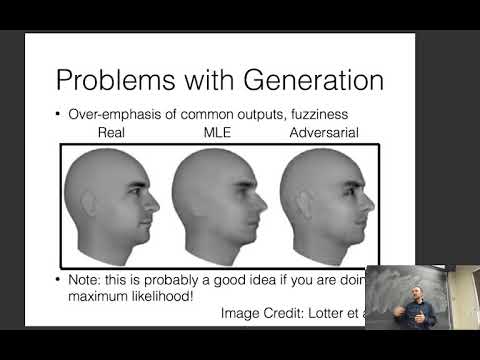

Explore adversarial learning in neural networks for natural language processing in this lecture from CMU's CS 11-747 course. Dive into generative adversarial networks (GANs) and examine how they can be applied to both the features and the outputs of NLP models. Learn why GANs are difficult to use when the outputs are discrete, as words in text are, and discover techniques for overcoming these obstacles. Investigate adversarial training methods for domain adaptation, multi-task learning, and unsupervised style transfer in text. Gain insights into tricks for stabilizing GAN training and into applications such as GAN-based data cleaning. Professor Graham Neubig combines theoretical explanations with practical examples to deepen your understanding of these advanced NLP concepts.

Neural Nets for NLP 2017 - Adversarial Learning

#Computer Science

#Artificial Intelligence

#Neural Networks

#Natural Language Processing (NLP)

#Data Science

#Data Analysis

#Data Cleaning