Description:

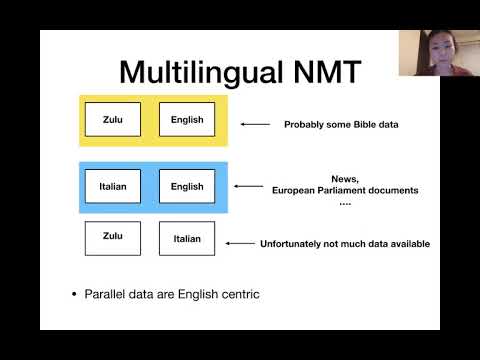

Explore methods for training multilingual systems, zero-shot adaptation, and open problems in multilingual learning in this 40-minute lecture from CMU's CS11-737 "Multilingual Natural Language Processing" course. Delve into topics such as cross-lingual transfer, rapid adaptation of massive multilingual models, meta-learning for multilingual training, and improving zero-shot neural machine translation. Examine challenges like training data imbalance, underperformance of multilingual models compared to bilingual ones, and issues with one-to-many transfer. Learn about techniques including heuristic sampling of data, multilingual knowledge distillation, and adding language-specific layers. Gain insights into the complexities of supporting multiple languages and addressing the needs of underrepresented languages in NLP.

CMU Multilingual NLP 2020 - Multilingual Training and Cross-Lingual Transfer

#Computer Science

#Artificial Intelligence

#Natural Language Processing (NLP)

#Machine Learning

#Meta-Learning

#Multilingual Natural Language Processing