Description:

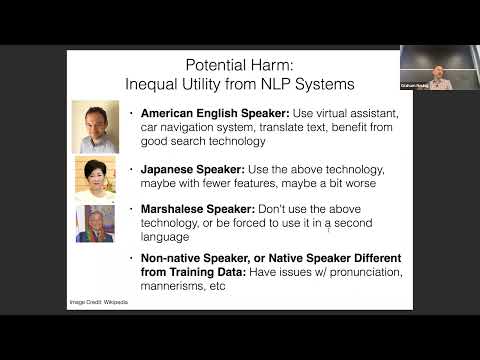

Explore bias and fairness in Natural Language Processing (NLP) in this lecture from CMU's Advanced NLP course. The lecture surveys the types of bias found in NLP models, including allocational harm, stereotyping, and bias introduced through human annotation, along with strategies for preventing them. It then turns to bias detection. Topics include word embedding association tests with null hypothesis testing, bias in word and sentence embeddings, disparities in error rates, and language disparities across different cities. It also covers counterfactual evaluation and mitigation strategies such as debiasing feature representations and sentence embeddings and data augmentation. The lecture closes with an overview of current bias research in NLP and what it implies for building fair and equitable language models.

CMU Advanced NLP: Bias and Fairness


#Computer Science

#Artificial Intelligence

#Natural Language Processing (NLP)

#Machine Learning

#Word Embeddings

#Data Augmentation

#Ethics in AI

#Fairness in AI

#Sentence Embedding