Description:

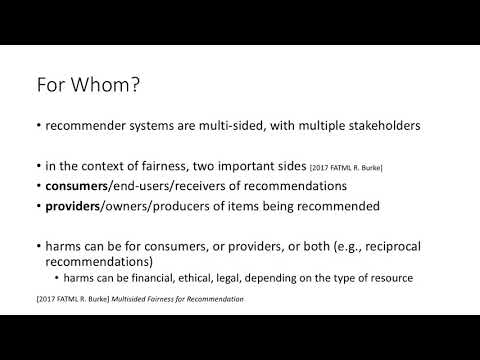

Explore techniques for building user trust in recommender systems through fairness and explanations in this 27-minute conference talk from UMAP'20. Delve into the ethical implications of AI-driven decision-making, examining fairness aspects such as non-discrimination, diversity awareness, and bias elimination. Learn about explanation approaches that provide human-understandable interpretations of complex systems. Discover how fairness and explanations can work together to promote trust, considering the perspectives of stakeholders with different technical backgrounds. Gain insights into the taxonomy of fairness in recommendations, white-box vs. black-box models, local proxy models, and counterfactual explanations. Understand the importance of fairness-aware explanations and their role in building trustworthy AI systems that affect everyday decisions.

Building User Trust in Recommendations via Fairness and Explanations

#Conference Talks

#ACM SIGCHI

#Computer Science

#Machine Learning

#Recommender Systems

#Artificial Intelligence

#AI Ethics

#Ethics in AI

#Fairness in AI