Description:

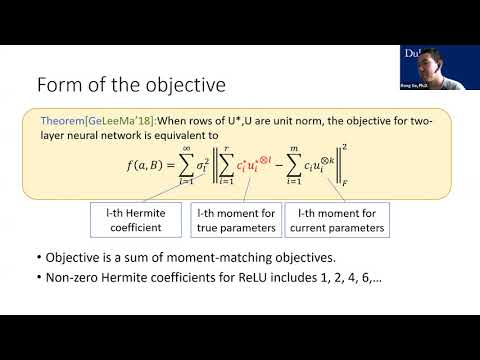

Explore tensor decomposition and over-parameterization in this 37-minute conference talk from the Fields Institute's Mini-symposium on Low-Rank Models and Applications. Compare the lazy training regime with gradient descent as ways of approximating a target tensor. Examine why gradient descent is hard to analyze, where lazy training fails, and how local minima arise. Learn about a new algorithm that escapes local minima by starting from a small random correlation and then amplifying that initial correlation with tensor power methods. Gain insight into why over-parameterization matters when training neural networks and how it can help avoid bad locally optimal solutions.
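The abstract mentions amplifying an initial correlation with tensor power methods. As a rough illustration of that general idea only (not the algorithm presented in the talk), here is a minimal sketch of the classical tensor power iteration on a hypothetical symmetric third-order tensor whose orthogonal components are assumed to be the standard basis vectors with equal weights. Each step maps a unit vector u to T(I, u, u) / ||T(I, u, u)||. For this particular tensor the i-th entry of T(I, u, u) is u_i squared, so the ratio between any two correlations is squared at every iteration. As a result, the component with the largest initial correlation, even a small random one, ends up dominating.

```python
import math
import random


def make_orthogonal_tensor(weights):
    """Hypothetical symmetric tensor T = sum_i w_i * e_i (x) e_i (x) e_i,
    using standard basis vectors e_i as the orthogonal components."""
    d = len(weights)
    T = [[[0.0] * d for _ in range(d)] for _ in range(d)]
    for i, w in enumerate(weights):
        T[i][i][i] = w
    return T


def contract(T, u):
    """Return the vector T(I, u, u) with entries sum_{j,k} T[i][j][k] * u[j] * u[k]."""
    d = len(u)
    return [
        sum(T[i][j][k] * u[j] * u[k] for j in range(d) for k in range(d))
        for i in range(d)
    ]


def normalize(v):
    """Scale v to unit Euclidean norm."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def tensor_power_method(T, u, iters=30):
    """Repeat u <- T(I, u, u) / ||T(I, u, u)|| and return every iterate."""
    history = [u]
    for _ in range(iters):
        u = normalize(contract(T, u))
        history.append(u)
    return history


if __name__ == "__main__":
    random.seed(0)
    d = 8
    # Random start: small correlation with every component.
    u0 = normalize([random.gauss(0.0, 1.0) for _ in range(d)])
    T = make_orthogonal_tensor([1.0] * d)
    history = tensor_power_method(T, u0, iters=30)
    winner = max(range(d), key=lambda i: abs(u0[i]))
    for step, u in enumerate(history[:6]):
        # Correlation with the winning component grows step by step.
        print(step, round(abs(u[winner]), 4))
```

Because the weights are all equal here, the winner is simply the coordinate with the largest initial |u_i|. With unequal weights or non-orthogonal components, the dynamics are more involved, and this sketch makes no claim about those cases.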

Beyond Lazy Training for Over-parameterized Tensor Decomposition


#Mathematics

#Algebra

#Linear Algebra

#Tensor Decomposition

#Computer Science

#Machine Learning

#Artificial Intelligence

#Neural Networks

#Gradient Descent

#Implicit Regularization