Description:

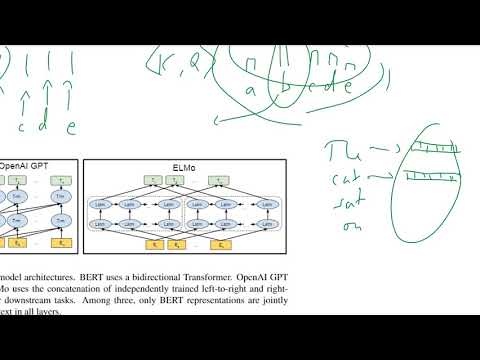

Explore a video analysis of BERT (Bidirectional Encoder Representations from Transformers), the language representation model introduced by Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. Learn how pre-training on both left and right context lets BERT reach state-of-the-art results across a range of language tasks. Examine the model's architecture and training, including attention mechanisms, masked language modeling, and pre-trained language models. Compare BERT with other models, discuss its limitations, and see how its conceptually simple yet empirically powerful approach improves results on question answering, language inference, and other NLP benchmarks.

BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

#Computer Science

#Artificial Intelligence

#Natural Language Processing (NLP)

#LLM (Large Language Model)

#BERT

#Deep Learning