Description:

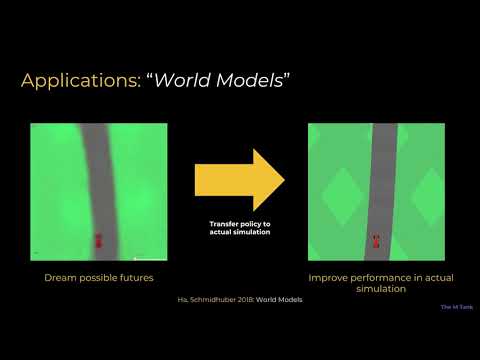

This one-hour talk by Ben Duffy introduces Reinforcement Learning (RL). It traces the evolution of sequential decision-making, from superhuman performance in complex board games to 2D Atari games and 3D games such as Doom, Quake, and StarCraft, and relates that progress to the pursuit of artificial general intelligence. The talk covers the main breakthroughs, paradigms, formulations, and obstacles in RL. It explains the agent-environment loop, core concepts such as state, reward, value functions, and policies, and the distinction between model-based and model-free approaches. It also surveys applications in robotics, language grounding, and multi-agent collaboration, examines deep reinforcement learning techniques including value function approximation and policy gradients, and reviews the current state of the field and its future directions.

Introduction to Reinforcement Learning

#Computer Science

#Machine Learning

#Reinforcement Learning

#Artificial Intelligence

#Engineering

#Robotics

#Humanities

#Games

#Board Games

#Deep Reinforcement Learning

#Policy Gradient

#Model Based Reinforcement Learning