Description:

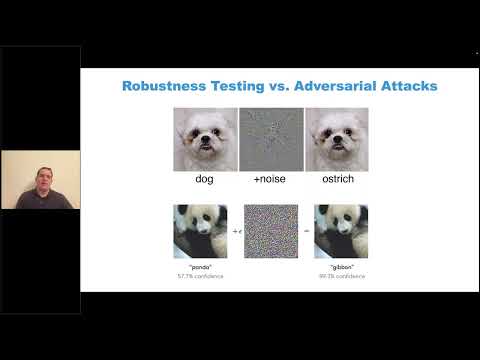

Learn practical techniques for building safe, fair, and reliable AI models in this 42-minute talk by David Talby, PhD. Address four common challenges in AI development (robustness, labeling errors, bias, and data leakage) using open-source tools and real-world examples. Gain actionable strategies for improving machine learning, NLP, and data science projects so that AI systems behave safely and correctly in real-world scenarios. Explore tools for detecting and fixing labeling errors, testing model robustness, and addressing bias across critical groups. Discover methods to prevent data leakage, particularly when handling personally identifiable information (PII). Ideal for data science practitioners and leaders looking to implement responsible AI practices in their work.

Applying Responsible AI with Open-Source Tools

#Computer Science

#Artificial Intelligence

#Responsible AI

#Data Science

#Machine Learning

#AI Ethics