Description:

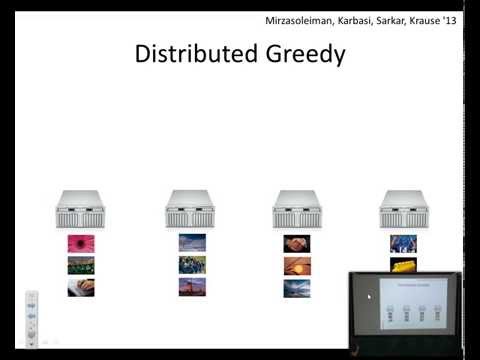

Explore the power of randomization in distributed submodular maximization for massive datasets in this 32-minute lecture by Alina Ene. Delve into the application of constrained submodular maximization in machine learning problems such as exemplar clustering, document summarization, and sensor placement. Learn about a simple, embarrassingly parallel distributed algorithm that achieves constant factor, worst-case approximation guarantees. Discover its efficiency in large-scale problems with various constraints, yielding results comparable to centralized settings. Examine topics including combinatorial optimization, submodularity, multimode sensor coverage, identifying representatives, and the need for parallelization. Gain insights into the greedy algorithm, its distributed performance, and the analysis of randomness in optimization. Investigate non-monotone functions and explore future directions in this field. The lecture, part of the Hausdorff Trimester Program on Combinatorial Optimization, also covers joint work with Rafael Barbosa, Huy Nguyen, and Justin Ward.

Read more

The Power of Randomization - Distributed Submodular Maximization on Massive Datasets

Add to list

#Computer Science

#Distributed Computing

#Distributed Algorithms

#Machine Learning

#Algorithms

#Algorithm Analysis

#Mathematics

#Combinatorial Optimization

#High Performance Computing

#Parallel Computing

#Parallelization