Description:

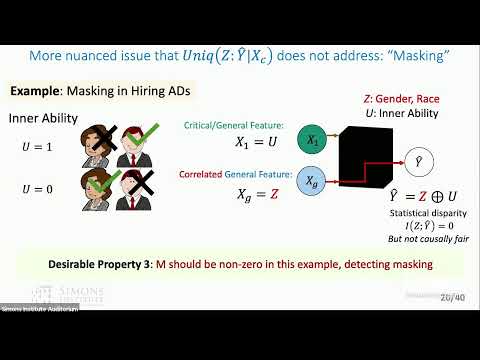

Explore the intersection of algorithmic fairness, causality, and information theory in this 37-minute lecture by Sanghamitra Dutta from JP Morgan AI Research. Delve into the complexities of identifying and explaining sources of disparity in machine learning models, particularly in high-stakes applications. Learn about a systematic measure of "non-exempt disparity" that combines concepts from information theory and causality. Discover how to quantify accuracy-fairness trade-offs using Chernoff information. Gain insight into the challenges of resolving legal disputes and informing policy on algorithmic bias, including the importance of distinguishing disparities that arise from occupational necessities from those that arise from other factors. Examine case studies, theorems, and simulations that illustrate these concepts, and see how Partial Information Decomposition and causality can be applied to fairness problems in AI.
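For readers unfamiliar with Chernoff information, here is a minimal sketch of the standard quantity itself, not of the lecture's fairness analysis. For two discrete distributions P and Q it is C(P, Q) = -min over λ in [0, 1] of log Σ P(x)^λ Q(x)^(1-λ), the best achievable error exponent when deciding between P and Q from i.i.d. samples. The function name `chernoff_information`, the grid search over λ, and the framing of P and Q as a score's distributions under the two true labels within one group are illustrative assumptions.

```python
import math


def chernoff_information(p, q, steps=10_000):
    """Chernoff information C(P, Q) in nats between two discrete distributions.

    C(P, Q) = -min over lam in [0, 1] of log(sum_x P(x)**lam * Q(x)**(1 - lam)).
    Illustrative sketch: lam is found by a plain grid search of `steps` + 1 points.
    """
    if len(p) != len(q):
        raise ValueError("p and q must be defined on the same alphabet")
    best = math.inf
    for i in range(steps + 1):
        lam = i / steps
        # Python evaluates 0.0 ** 0 as 1.0, the usual convention at lam = 0 or 1.
        total = sum(px ** lam * qx ** (1 - lam) for px, qx in zip(p, q))
        if total == 0.0:
            # Disjoint supports: the two hypotheses are perfectly distinguishable.
            return math.inf
        best = min(best, math.log(total))
    return -best


# Hypothetical example: P and Q stand for a score's distribution under the two
# true labels within one demographic group.
p = [0.9, 0.1]
q = [0.1, 0.9]
print(round(chernoff_information(p, q), 4))  # prints 0.5108
```

For this symmetric pair the minimum falls at λ = 0.5, so C = -ln(2 · √0.09) = -ln 0.6 ≈ 0.511 nats. A grid search keeps the sketch dependency-free; since the log-sum is convex in λ, a ternary search or a scalar optimizer would find the same minimum with far fewer evaluations.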

Algorithmic Fairness From The Lens Of Causality And Information Theory

#Computer Science

#Artificial Intelligence

#Algorithmic Fairness

#Machine Learning

#Information Theory

#Mathematics

#Statistics & Probability

#Causality