Description:

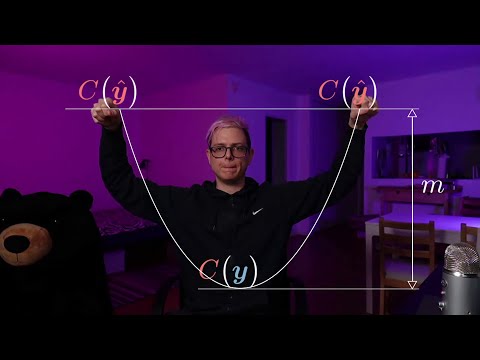

Explore autoencoders (AE), denoising autoencoders (DAE), variational autoencoders (VAE), and generative adversarial networks (GAN) in this video lecture. Learn to implement these models in PyTorch, analyze their kernels, and understand their applications in generative modeling and image processing. Work through practical code examples, compare the architectures, and cover key concepts such as latent space interpolation, embedding distributions, and cost network design. See state-of-the-art inpainting techniques and how these models can be interpreted as energy-based models (EBM). GANs are introduced with an intuitive Italian vs. Swiss analogy, and the lecture concludes with a PyTorch code reading session for a GAN implementation.

AE, DAE, and VAE with PyTorch - Generative Adversarial Networks and Code

#Computer Science

#Artificial Intelligence

#Neural Networks

#Generative Adversarial Networks (GAN)

#Deep Learning

#PyTorch

#Variational Autoencoder (VAE)