Description:

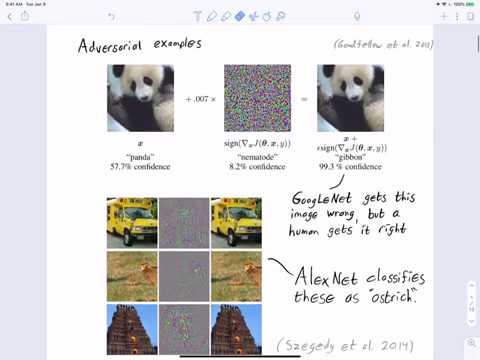

Explore adversarial examples for deep neural networks in this lecture, which covers white-box attacks, black-box attacks, real-world attacks, and adversarial training. Learn about Projected Gradient Descent, the Fast Gradient Sign Method, Carlini-Wagner methods, Universal Adversarial Perturbations, Adversarial Patches, Transferability Attacks, and Zeroth Order Optimization. Examine the challenges of mounting attacks in the physical world. Access the accompanying lecture notes and the referenced research papers to study this area of deep learning security further.

Adversarial Examples for Deep Neural Networks
