Description:

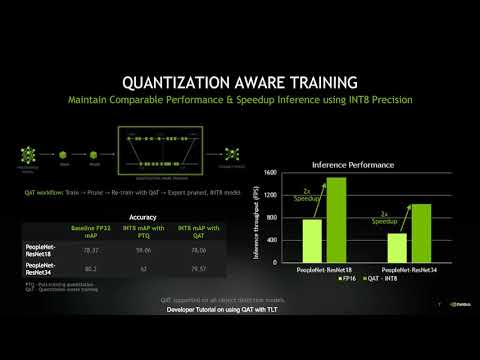

Discover how to accelerate the creation of vision AI models using NVIDIA Transfer Learning Toolkit and pre-trained models in this 47-minute video. Survey the wide adoption of vision AI across industries and learn how to overcome the challenges of training accurate, high-performance deep learning models. Dive into the Transfer Learning Toolkit, a simplified AI toolkit that lets developers train models without writing code. Examine the pre-trained models available on NGC and learn how to fine-tune them for specific use cases. Explore techniques such as model pruning and INT8 quantization for building high-performance inference models, reducing development effort and speeding time to market. Topics covered include quantization-aware training, automatic mixed precision, instance segmentation, and specific models such as PeopleNet and Face Mask Detection. Walk through the training workflow: data preparation, model specification, training, evaluation, and deployment using DeepStream.

Accelerating Vision AI Applications Using NVIDIA Transfer Learning Toolkit and Pre-Trained Models

#Computer Science

#Machine Learning

#Transfer Learning

#Deep Learning

#Social Sciences

#Urban Planning

#Smart Cities

#Pre-trained Models