Description:

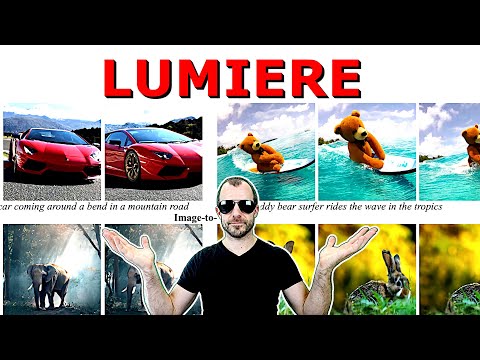

Follow a detailed walkthrough of Lumiere, a text-to-video diffusion model from Google Research designed to generate realistic and coherent motion in synthesized videos. See how its Space-Time U-Net architecture generates the entire duration of a video in a single pass, instead of first producing distant keyframes and then filling in the frames between them, as existing keyframe-based approaches do. Learn how the model processes video at multiple space-time scales, why it reaches state-of-the-art results in text-to-video generation, and how the same model supports a range of content-creation tasks. The technical topics include temporal down- and up-sampling, building on a pre-trained text-to-image model, and applications such as image-to-video generation, video inpainting, and stylized generation. The video closes with the model's training, evaluation, and potential societal impact.
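The description mentions temporal down- and up-sampling. As a rough illustration only, and not Lumiere's actual modules, the sketch below shows what it means to shrink a clip in time as well as in space and then expand it back. It assumes a grayscale clip stored as nested lists indexed `video[t][y][x]`, uses plain average pooling by a factor of 2 on every axis to go down, and uses nearest-neighbor repetition to go back up. The names `downsample_spacetime` and `upsample_spacetime` are hypothetical.

```python
from typing import List

# Hypothetical representation: a grayscale clip indexed as video[t][y][x].
Video = List[List[List[float]]]


def downsample_spacetime(video: Video, factor: int = 2) -> Video:
    """Average-pool a (T, H, W) clip by `factor` along time, height and width."""
    t_len, h_len, w_len = len(video), len(video[0]), len(video[0][0])
    # For simplicity, require every dimension to divide evenly by the factor.
    assert t_len % factor == 0 and h_len % factor == 0 and w_len % factor == 0
    out: Video = []
    for t in range(0, t_len, factor):
        frame = []
        for y in range(0, h_len, factor):
            row = []
            for x in range(0, w_len, factor):
                # Gather the factor x factor x factor space-time block and average it.
                block = [
                    video[t + dt][y + dy][x + dx]
                    for dt in range(factor)
                    for dy in range(factor)
                    for dx in range(factor)
                ]
                row.append(sum(block) / len(block))
            frame.append(row)
        out.append(frame)
    return out


def upsample_spacetime(video: Video, factor: int = 2) -> Video:
    """Nearest-neighbor upsample a (T, H, W) clip by `factor` along every axis."""
    return [
        [
            [video[t // factor][y // factor][x // factor]
             for x in range(len(video[0][0]) * factor)]
            for y in range(len(video[0]) * factor)
        ]
        for t in range(len(video) * factor)
    ]


# Hypothetical 8-frame, 4x4 clip whose pixel values equal the frame index.
clip = [[[float(t)] * 4 for _ in range(4)] for t in range(8)]
coarse = downsample_spacetime(clip)
print(len(coarse), len(coarse[0]), len(coarse[0][0]))  # 4 2 2
print(coarse[0][0][0], coarse[3][0][0])  # 0.5 6.5
restored = upsample_spacetime(coarse)
print(len(restored), len(restored[0]), len(restored[0][0]))  # 8 4 4
```

Halving time as well as height and width leaves the coarse tensor with 8x fewer values (16 instead of 128 here), which is the general reason compressing along the time axis makes it cheaper to process a whole clip at once. A learned model would replace the fixed averaging and repetition with trainable layers.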

Lumiere: Space-Time Diffusion Model for Video Generation


#Computer Science

#Machine Learning

#Diffusion Models

#Artificial Intelligence

#Deep Learning

#Computer Vision

#Image Processing
