Description:

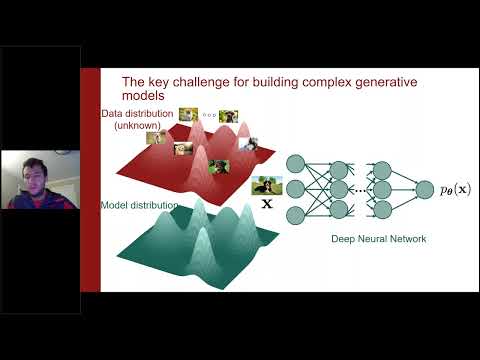

Explore diffusion-based generative modeling in this 48-minute conference talk by Stanford University Associate Professor Stefano Ermon. The talk covers the technical side of score-based generative models, which have substantially improved image generation quality. Learn how these models differ from traditional approaches: rather than modeling the data density itself, they learn the score function, the vector field of gradients of the log data density. Discover the advantages of this framework, including flexible architectures, no need to sample from the model during training, and no adversarial training. Understand how these models enable exact likelihood evaluation, achieve state-of-the-art sample quality, and improve performance on a range of inverse problems, particularly in medical imaging. Follow along as Ermon covers data simulators, score functions, random projections, noise conditioning, and annealing dynamics. Gain insights into practical applications such as stroke painting, language-guided generation, and molecular conformation prediction.


Denoising Diffusion-Based Generative Modeling


#Computer Science

#Machine Learning

#Diffusion Models

#Health & Medicine

#Health Care

#Radiology

#Medical Imaging
