Description:

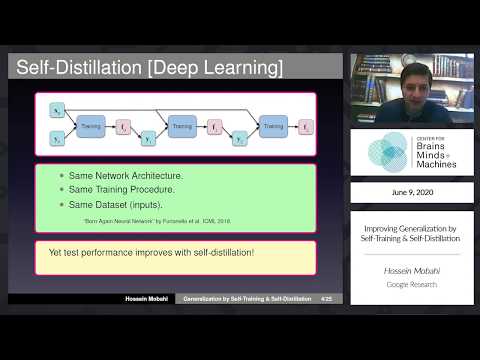

Explore the concept of self-training and self-distillation in machine learning through this 44-minute lecture by Hossein Mobahi from Google Research. Delve into the surprising phenomenon where retraining models using their own predictions can lead to improved generalization performance. Examine the regularization effects induced by this process and their amplification through multiple rounds of retraining. Investigate the rigorous characterization of these effects in Hilbert space learning, and its relation to infinite-width neural networks. Cover topics such as unconstrained form, closed-form solutions, power iteration analogy, capacity control, and generalization guarantees. Analyze deep learning experiments and discuss open problems in the field of self-training and self-distillation.

Improving Generalization by Self-Training & Self Distillation

Add to list

#Computer Science

#Deep Learning

#Machine Learning

#Supervised Learning

#Artificial Intelligence

#Neural Networks

#Mathematics

#Functional Analysis

#Hilbert Spaces

0:00 / 0:00