Description:

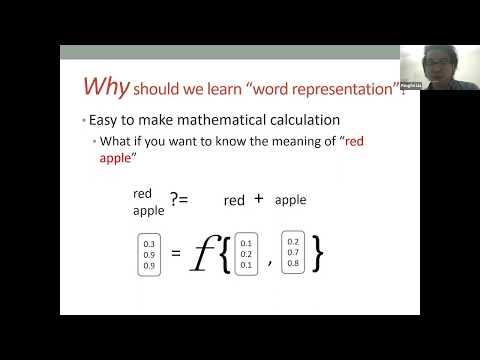

Explore neural representation learning for natural language processing in this lecture from the CMU Low Resource NLP Bootcamp 2020. The lecture surveys methods for learning neural representations of language, including word and sentence representations, supervised and unsupervised learning approaches, and case studies of NNLM, GloVe, ELMo, and BERT. It covers different structural biases, clusters of approaches, and must-know points about RNN, CNN, and Transformer models. It also explains when to use non-contextualized versus contextualized representations and discusses the role of software, models, and corpora in neural representation learning for NLP.

CMU Low Resource NLP Bootcamp 2020 - Neural Representation Learning

#Computer Science

#Artificial Intelligence

#Natural Language Processing (NLP)

#LLM (Large Language Model)

#BERT
