Description:

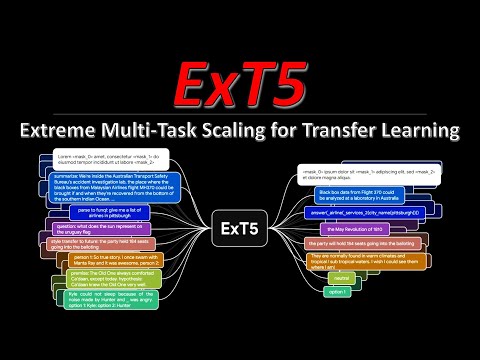

Explore an in-depth analysis of ExT5, a model that pushes the limits of T5 by pre-training on ExMix, a collection of 107 supervised NLP tasks. Learn about the model's architecture, how the tasks are formulated, and how its performance compares to T5 baselines. Discover insights on multi-task scaling, co-training transfer among task families, and the impact of self-supervised data in pre-training. Gain an understanding of ExT5's improved performance across a range of NLP tasks and its greater sample efficiency during pre-training. The video covers task selection, pre-training vs. pre-finetuning, and experimental results, offering insights for researchers and practitioners in natural language processing and transfer learning.

Towards Extreme Multi-Task Scaling for Transfer Learning


#Computer Science

#Machine Learning

#Transfer Learning

#Multi-task Learning (MTL)

#Self-supervised Learning

#Fine-Tuning
