Description:

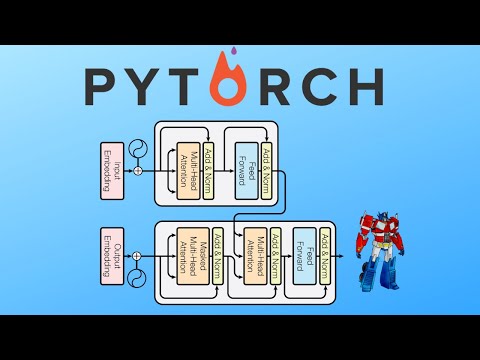

Dive into a comprehensive 57-minute video tutorial on implementing a Transformer from scratch in PyTorch, based on the paper "Attention Is All You Need". The tutorial opens with a detailed review of the paper and its original Transformer architecture. It then builds the key components one at a time: the attention mechanism, the transformer block, the encoder, and the decoder. Finally, it assembles these pieces into a complete Transformer model, runs it on a small example, and fixes the errors that come up along the way. Links to recommended courses and free materials on machine learning, deep learning, and natural language processing are provided for further study.
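The central operation the tutorial builds on is the paper's scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. As a rough illustration only, the sketch below is written in plain Python so it runs without PyTorch. It is not the tutorial's own code. The function names and the boolean mask convention (True means a query position may attend to a key position, as a decoder's causal mask would require) are assumptions made for this example.

```python
import math
from typing import List, Optional, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    # Plain matrix product: (n x m) @ (m x p) -> (n x p).
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max for numerical stability; exp(-inf) evaluates to 0.0.
    top = max(row)
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    q: Matrix, k: Matrix, v: Matrix, mask: Optional[List[List[bool]]] = None
) -> Tuple[Matrix, Matrix]:
    """Return (output, attention_weights) for softmax(Q K^T / sqrt(d_k)) V.

    Hypothetical mask convention: mask[i][j] is True if query i may attend to key j.
    Each row must allow at least one key, otherwise the softmax is undefined.
    """
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    if mask is not None:
        # Disallowed positions get -inf so they receive zero weight after softmax.
        scaled = [[s if allowed else float("-inf") for s, allowed in zip(row, mrow)]
                  for row, mrow in zip(scaled, mask)]
    weights = [softmax(row) for row in scaled]
    return matmul(weights, v), weights


if __name__ == "__main__":
    q = k = [[1.0, 0.0], [0.0, 1.0]]
    v = [[1.0, 2.0], [3.0, 4.0]]
    causal = [[True, False], [True, True]]  # position 0 cannot see position 1
    out, w = scaled_dot_product_attention(q, k, v, mask=causal)
    print(out)
    print(w)
```

Each row of the returned weights sums to 1, and with the causal mask the first position attends only to itself, so its output equals the first row of `v`. A PyTorch version would replace the list comprehensions with batched tensor operations and split the inputs across several heads.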

Pytorch Transformers from Scratch - Attention Is All You Need


#Computer Science

#Deep Learning

#PyTorch

#Machine Learning

#Attention Mechanisms

#Transformer Models

#Encoder-Decoder Architecture
