Description:

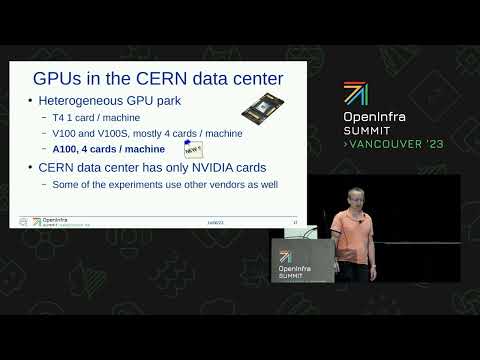

Learn about CERN's use of Multi-Instance GPU (MIG) capabilities in this 28-minute conference talk on deploying NVIDIA A100 GPUs in CERN's private cloud infrastructure. Explore the deployment models considered, including PCI passthrough and virtual GPUs, along with their advantages and challenges in supporting diverse applications ranging from machine learning to proton beam simulations. Gain insight into how CERN provides centrally managed GPU resources to its user community, with detailed explanations of the different deployment approaches and the model ultimately chosen. Follow along as speaker Ulrich Schwickerath breaks down the technical aspects of GPU provisioning, stability considerations, and future development plans for CERN's cloud infrastructure.

Multi-Instance GPU Deployment for Machine Learning and Particle Beam Simulations at CERN

#Computer Science

#High Performance Computing

#Parallel Computing

#GPU Computing

#Machine Learning

#Programming

#Cloud Computing

#OpenStack

#Science

#Physics

#Particle Physics

0:00 / 0:00