Description:

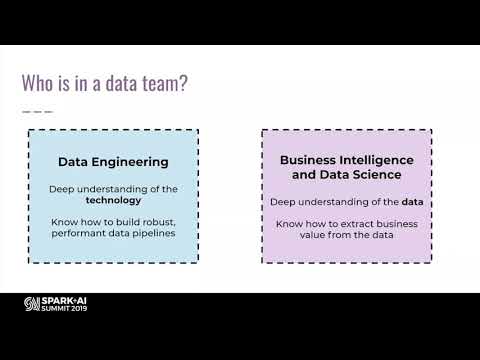

Explore best practices for building and deploying data pipelines in Apache Spark in this 41-minute conference talk by Vicky Avison from Databricks. Learn about key considerations such as performance, idempotency, reproducibility, and tackling the small file problem when constructing data pipelines. Discover a common Data Engineering toolkit that separates production concerns from business logic, enabling non-Data-Engineers to define pipelines efficiently. Examine Waimak, an open-source library for Apache Spark, which streamlines the transition from prototype to production. Gain insights into new approaches and best practices for deploying data pipelines, an often overlooked aspect of Data Engineering. Understand the composition of data teams, challenges in pipeline development, and strategies for leveraging team skills effectively. Explore tools, frameworks, and design principles for creating a robust Data Engineering framework, along with simplified methods for data ingestion, business logic development, environment management, and deployments.

Read more

Best Practices for Building and Deploying Data Pipelines in Apache Spark

Add to list

#Data Science

#Big Data

#Apache Spark

#Data Engineering

#Data Pipelines

#Data Ingestion

#Programming

#Web Development

#API Design

#Idempotency

0:00 / 0:00