Description:

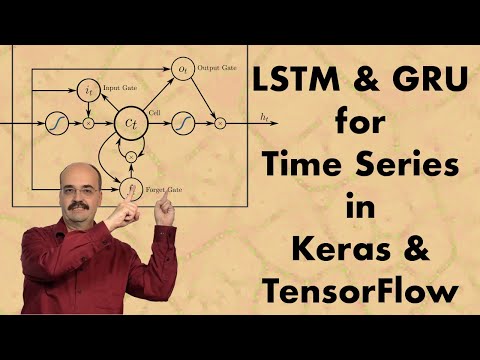

Explore Long Short-Term Memory (LSTM) and Gated Recurrent Units (GRU) in this 28-minute video tutorial. Learn how these layer types are used to build recurrent neural networks in Keras, laying the groundwork for Natural Language Processing (NLP) and time-series prediction. Dive into LSTM diagrams, internals, and sigmoid functions before comparing LSTM to GRU. Follow along with practical examples, including an LSTM implementation and a sunspot prediction model. Access the accompanying code on GitHub and discover additional resources to further your deep learning journey.
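To make the LSTM internals, the role of the sigmoid function, and the LSTM/GRU comparison more concrete, here is a minimal NumPy sketch of one forward time step for each cell, plus a windowing helper of the kind commonly used to frame a series like sunspot counts as a prediction problem. This is not the Keras implementation and not the tutorial's code: the weight layout, gate order, helper names (`sigmoid`, `make_windows`, `lstm_step`, `gru_step`, `param_count`), and the sine-wave stand-in for sunspot data are all assumptions for illustration. In practice you would use the built-in `tf.keras.layers.LSTM` and `tf.keras.layers.GRU` layers.

```python
import numpy as np


def sigmoid(x):
    """Logistic function: squashes any real value into (0, 1) so it can act as a gate."""
    return 1.0 / (1.0 + np.exp(-x))


def make_windows(series, window):
    """Hypothetical helper: split a 1-D series into (samples, window, 1) inputs and next-step targets."""
    series = np.asarray(series, dtype=float)
    X = np.stack([series[i:i + window] for i in range(len(series) - window)])
    y = series[window:]  # the value right after each window is its target
    return X[..., np.newaxis], y


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step. Assumed gate order in W, U, b: input, forget, candidate, output."""
    z = x @ W + h_prev @ U + b
    i, f, g, o = np.split(z, 4, axis=-1)
    i, f, o = sigmoid(i), sigmoid(f), sigmoid(o)  # gates in (0, 1)
    g = np.tanh(g)                                 # candidate values in (-1, 1)
    c = f * c_prev + i * g                         # keep part of old memory, write new
    h = o * np.tanh(c)                             # output a filtered view of the cell state
    return h, c


def gru_step(x, h_prev, W, U, b):
    """One GRU time step (reset gate applied before the recurrent matrix).
    Assumed gate order in W, U, b: update, reset, candidate."""
    units = h_prev.shape[-1]
    xz, xr, xn = np.split(x @ W + b, 3, axis=-1)
    z = sigmoid(xz + h_prev @ U[:, :units])            # update gate
    r = sigmoid(xr + h_prev @ U[:, units:2 * units])   # reset gate
    n = np.tanh(xn + (r * h_prev) @ U[:, 2 * units:])  # candidate state
    return z * h_prev + (1.0 - z) * n                  # blend old and new state


def param_count(input_dim, units, blocks):
    """Weights plus one bias per block: 4 blocks for this LSTM sketch, 3 for this GRU sketch."""
    return blocks * (units * (input_dim + units) + units)


# Hypothetical toy data: a sine wave standing in for a sunspot series.
series = np.sin(np.linspace(0, 4 * np.pi, 20))
X, y = make_windows(series, window=5)

rng = np.random.default_rng(0)
input_dim, units = 1, 8

# Randomly initialised (untrained) weights, for shape and data-flow illustration only.
W_l = rng.normal(scale=0.1, size=(input_dim, 4 * units))
U_l = rng.normal(scale=0.1, size=(units, 4 * units))
b_l = np.zeros(4 * units)
W_g = rng.normal(scale=0.1, size=(input_dim, 3 * units))
U_g = rng.normal(scale=0.1, size=(units, 3 * units))
b_g = np.zeros(3 * units)

# Run both cells over the first window, one time step at a time (batch size 1).
h_lstm, c_lstm = np.zeros((1, units)), np.zeros((1, units))
h_gru = np.zeros((1, units))
for t in range(X.shape[1]):
    x_t = X[0:1, t, :]  # shape (1, input_dim)
    h_lstm, c_lstm = lstm_step(x_t, h_lstm, c_lstm, W_l, U_l, b_l)
    h_gru = gru_step(x_t, h_gru, W_g, U_g, b_g)

print("windows:", X.shape, "targets:", y.shape)
print("LSTM params:", param_count(input_dim, units, 4))
print("GRU params:", param_count(input_dim, units, 3))
```

The GRU merges the LSTM's forget and input gates into a single update gate and drops the separate cell state, so with three weight blocks instead of four this sketch has three quarters of the LSTM's parameters (240 vs. 320 for one input feature and 8 units). Keras's default `GRU` layer (`reset_after=True`) keeps an extra bias vector, so its model summary would show a slightly larger count (264 for the same configuration).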

Programming LSTM with Keras and TensorFlow


#Computer Science

#Artificial Intelligence

#Neural Networks

#Recurrent Neural Networks (RNN)

#Long short-term memory (LSTM)

#Machine Learning

#TensorFlow

#Deep Learning

#Keras
