Description:

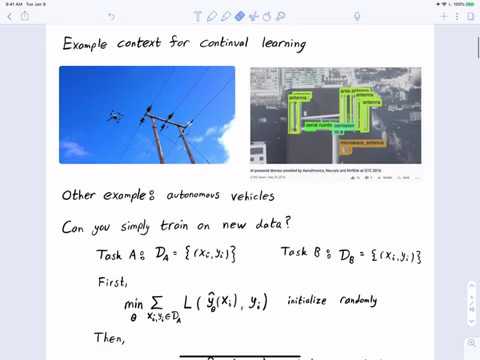

Explore continual learning and catastrophic forgetting in deep neural networks in this 42-minute lecture. It covers the problem's context, how continual learners are evaluated, and three families of algorithms: those based on regularization, on dynamic architectures, and on Complementary Learning Systems. Topics include permuted-data tasks, incremental task learning, multimodal learning, the Learning without Forgetting (LwF) algorithm, Elastic Weight Consolidation (EWC), Progressive Neural Networks, and generative replay. The lecture is part of Northeastern University's CS 7150 Deep Learning course and references key research papers in the field. Accompanying lecture notes are available for a more complete treatment of this central topic in machine learning.

Continual Learning and Catastrophic Forgetting
