Description:


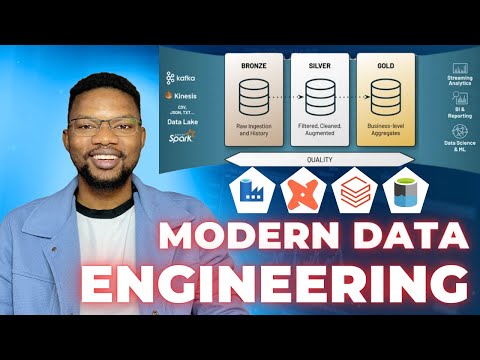

Work through an end-to-end data engineering project in this nearly two-hour video tutorial. Learn to build data pipelines using Apache Spark, Azure Databricks, and dbt (data build tool), with Azure as the cloud provider. The instructor walks through data ingestion into a lakehouse, data integration with Azure Data Factory, and data transformation using Databricks and dbt. Along the way, you set up resource groups, implement a medallion architecture, configure Azure Key Vault for secure secret management, and orchestrate data pipelines. The tutorial also covers integrating Azure Databricks with Key Vault and Data Factory, as well as dbt setup, configuration, and advanced features such as snapshots and data marts. By the end, you should understand modern data engineering practices well enough to build scalable, efficient data pipelines in the cloud.

Building Robust Data Pipelines for Modern Data Engineering - End-to-End Project


#Data Science

#Data Engineering

#Big Data

#Apache Spark

#Programming

#Cloud Computing

#Microsoft Azure

#Azure Storage

#Azure Data Factory

#Azure Key Vault

#Data Pipelines

#Azure Databricks

#Medallion Architecture
