Description:

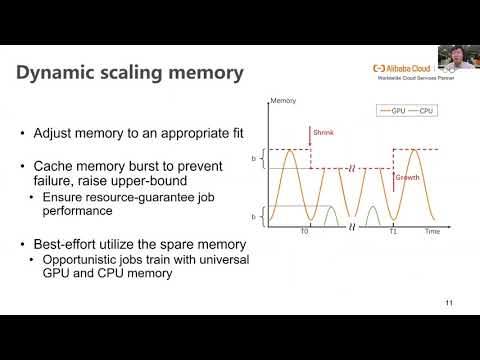

Explore a conference talk on AntMan, a deep learning infrastructure deployed at Alibaba that manages and scales GPU resources for complex deep learning workloads. Discover how it improves GPU utilization by dynamically scaling memory and computation within deep learning frameworks. Learn how co-designing the cluster scheduler with those frameworks lets multiple jobs share GPU resources without compromising performance. Gain insights into how AntMan handles the fluctuating resource demands of deep learning training jobs, yielding significant improvements in GPU memory and computation unit utilization, and what this approach means for job performance, system throughput, and hardware utilization in large-scale deep learning environments.

AntMan - Dynamic Scaling on GPU Cluster for Deep Learning


#Conference Talks

#OSDI (Operating Systems Design and Implementation)

#Computer Science

#Memory Management

#Programming

#Software Development

#Software Testing

#Performance Testing

#Benchmarking
