Description:

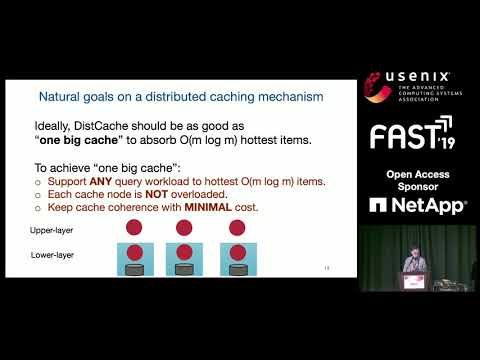

Explore a groundbreaking approach to load balancing in large-scale storage systems through this award-winning conference talk. Delve into DistCache, a novel distributed caching mechanism that provides provable load balancing for large storage infrastructures. Learn how it co-designs cache allocation with cache topology and query routing: hot objects are partitioned across multiple cache layers using independent hash functions, one per layer. Discover the theoretical foundations behind DistCache, which draw on expander graphs, network flows, and queuing theory. Examine the design challenges and their solutions, such as cache allocation strategies and efficient query routing. Gain insight into how DistCache is implemented, particularly for switch-based caching, and how it could apply to other storage systems. Review the evaluation setup and key results showing that DistCache's cache throughput scales linearly with the number of cache nodes.
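The allocation-and-routing idea described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not DistCache's implementation. It assumes two cache layers with the same number of nodes and uses SHA-256 salted with the layer index as a stand-in for independent per-layer hash functions. It also assumes that each query goes to the less-loaded of an object's two cached copies, which is a power-of-two-choices routing rule. The names `layer_hash` and `TwoLayerCache` are hypothetical.

```python
import hashlib


def layer_hash(key: str, layer: int, num_nodes: int) -> int:
    # Hypothetical stand-in for an independent hash function per layer:
    # salting the key with the layer index gives each layer its own mapping.
    digest = hashlib.sha256(f"{layer}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_nodes


class TwoLayerCache:
    """Hypothetical two-layer cache: each hot object lives on one node per layer."""

    def __init__(self, nodes_per_layer: int):
        self.n = nodes_per_layer
        # load[layer][node] counts queries served by that cache node.
        self.load = [[0] * nodes_per_layer for _ in range(2)]

    def candidates(self, key: str) -> list[tuple[int, int]]:
        # Cache allocation: the object is placed on node h_layer(key) in every layer.
        return [(layer, layer_hash(key, layer, self.n)) for layer in range(2)]

    def route(self, key: str) -> tuple[int, int]:
        # Assumed routing rule (power-of-two-choices): send the query to the
        # candidate node with the lower current load; ties go to layer 0.
        layer, node = min(self.candidates(key), key=lambda ln: self.load[ln[0]][ln[1]])
        self.load[layer][node] += 1
        return layer, node


if __name__ == "__main__":
    cache = TwoLayerCache(nodes_per_layer=4)
    # A skewed workload: 1000 queries spread over only 10 hot objects.
    for i in range(1000):
        cache.route(f"hot-{i % 10}")
    print(cache.load)
```

Because the two layers hash independently, two hot objects that collide on a node in one layer are unlikely to collide in the other as well. The routing rule can then shift load toward whichever copy is less busy.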

DistCache - Provable Load Balancing for Large-Scale Storage Systems with Distributed Caching

#Conference Talks

#FAST (File and Storage Technologies)

#Computer Science

#Distributed Systems

#Mathematics

#Graph Theory

#Expander Graphs

#Queuing Theory

#Load Balancing

#Cryptography

#Hash Functions