Description:

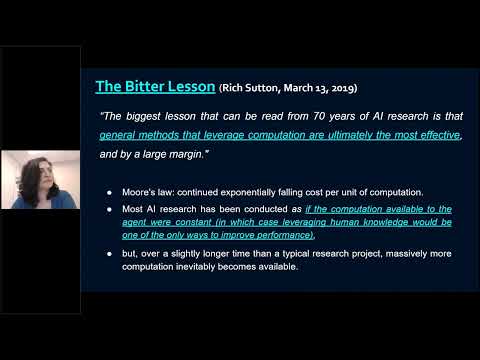

Explore recent advances in foundation models in this 36-minute conference talk by Dr. Irina Rish. The talk covers scaling laws, emergent behaviors, and AI democratization, with a focus on large-scale, self-supervised pre-trained models such as GPT-3, GPT-4, and ChatGPT. Discover how neural scaling laws help predict model performance and inform AI safety. Learn about collaborative efforts by universities and non-profits to make cutting-edge AI technology accessible. Examine the challenges academic and non-profit researchers face in obtaining large compute resources, possible solutions, and why open-source foundation models matter. Topics include building AGI, generalization in AI, the neural scaling revolution, successes of large-scale models, the history of neural scaling laws, and future directions in the field.

Recent Advances in Foundation Models: Scaling Laws, Emergent Behaviors, and AI Democratization

#Computer Science

#Artificial Intelligence

#Foundation Models

#Machine Learning

#Self-supervised Learning

#Scaling Laws